Point-SAM: Promptable 3D Segmentation Model for Point Clouds

The development of 2D foundation models for image segmentation has been significantly advanced by the Segment Anything Model (SAM). However, achieving similar success in 3D remains a challenge due to issues such as non-unified data formats, lightweight models, and the scarcity of labeled data with diverse masks. To this end, we propose Point-SAM, a 3D promptable segmentation model for point clouds. Our approach uses a transformer-based architecture that extends SAM to the 3D domain. We leverage part-level and object-level annotations and introduce a data engine to generate pseudo labels from SAM, thereby distilling 2D knowledge into our 3D model. Our model outperforms state-of-the-art models on several indoor and outdoor benchmarks and demonstrates a variety of applications, such as 3D annotation.

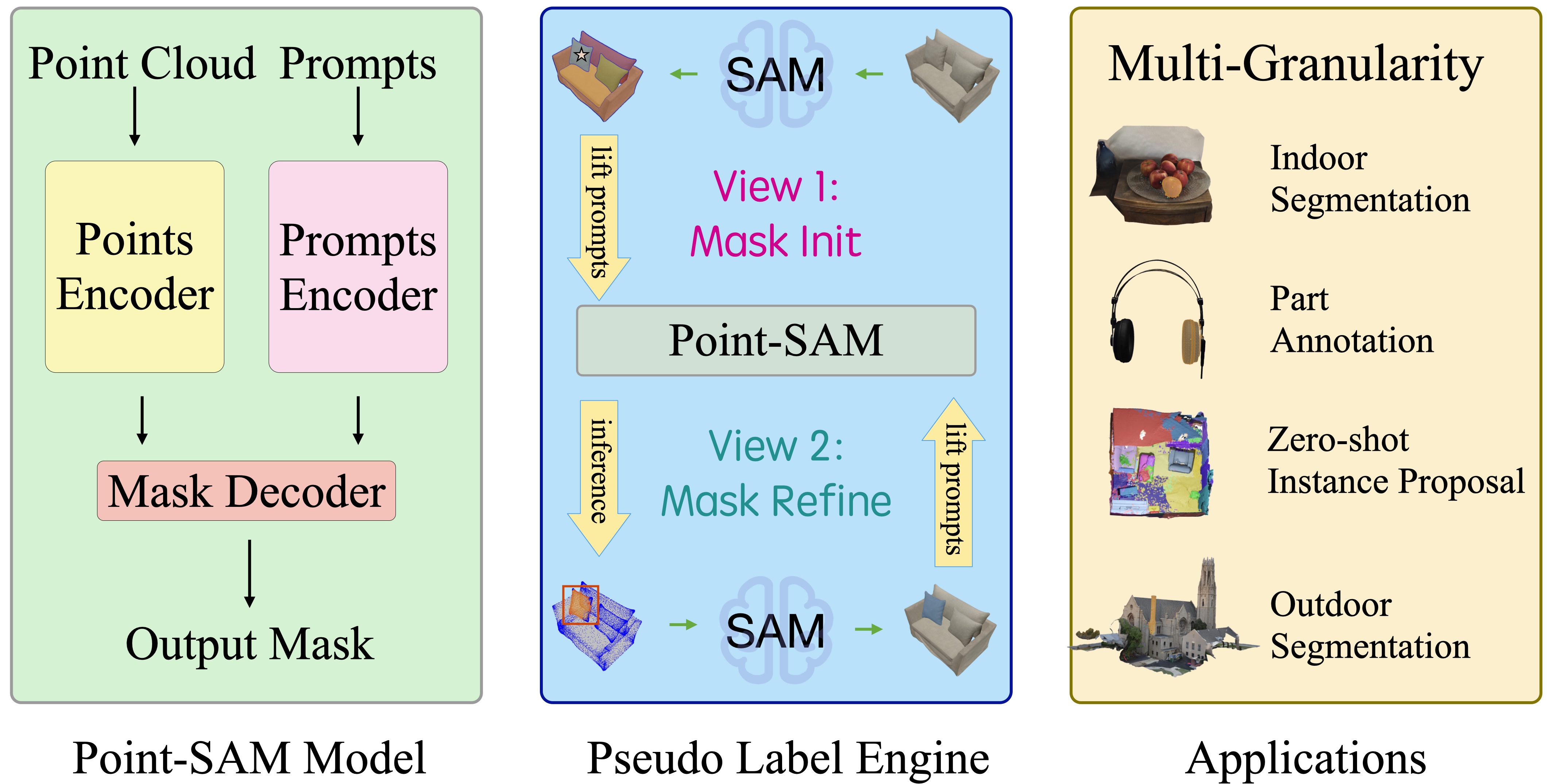

Point-SAM Model The point cloud and prompts are fed into the model, which generates the segmentation mask. The point cloud is first grouped by FPS and KNN, then tokenized by a small PointNet. The tokens are then fed into a ViT model. The prompts are encoded with sin-cos positional encoding and fused with the ViT's output. Finally, the fused output is decoded into the segmentation mask.
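To make the grouping and prompt-encoding steps concrete, here is a minimal NumPy sketch of farthest point sampling (FPS), KNN grouping, and a sin-cos encoding of 3D coordinates. It is an illustration, not the released implementation: the function names, the power-of-two frequency schedule, and the patch sizes are all assumptions, and the PointNet tokenizer and ViT are omitted.

```python
import numpy as np


def farthest_point_sampling(points: np.ndarray, num_centers: int, start: int = 0) -> np.ndarray:
    """Greedily pick indices of `num_centers` points that are far apart."""
    chosen = np.empty(num_centers, dtype=np.int64)
    # Squared distance from every point to its nearest already-chosen center.
    min_dist = np.full(points.shape[0], np.inf)
    current = start
    for i in range(num_centers):
        chosen[i] = current
        dist = np.sum((points - points[current]) ** 2, axis=1)
        min_dist = np.minimum(min_dist, dist)
        current = int(np.argmax(min_dist))
    return chosen


def knn_group(points: np.ndarray, center_idx: np.ndarray, k: int) -> np.ndarray:
    """For each center, return the indices of its k nearest points: shape (C, k)."""
    centers = points[center_idx]
    d2 = np.sum((centers[:, None, :] - points[None, :, :]) ** 2, axis=2)
    return np.argsort(d2, axis=1)[:, :k]


def sincos_encode(xyz: np.ndarray, num_freqs: int = 4) -> np.ndarray:
    """Encode (N, 3) coordinates as sin/cos features: shape (N, 3 * 2 * num_freqs)."""
    freqs = 2.0 ** np.arange(num_freqs)  # assumed frequency schedule
    angles = xyz[:, :, None] * freqs[None, None, :]  # (N, 3, F)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=2)  # (N, 3, 2F)
    return enc.reshape(xyz.shape[0], -1)


# Toy usage: 1024 random points, 64 patches of 16 points each.
rng = np.random.default_rng(0)
points = rng.random((1024, 3))
center_idx = farthest_point_sampling(points, 64)
groups = knn_group(points, center_idx, 16)
# Patches expressed relative to their centers, ready for a per-patch tokenizer.
patches = points[groups] - points[center_idx][:, None, :]
prompt_features = sincos_encode(points[:2])
```

Centering each patch on its FPS center is a common choice for patch tokenizers; whether Point-SAM normalizes patches this way is not stated in the text above.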

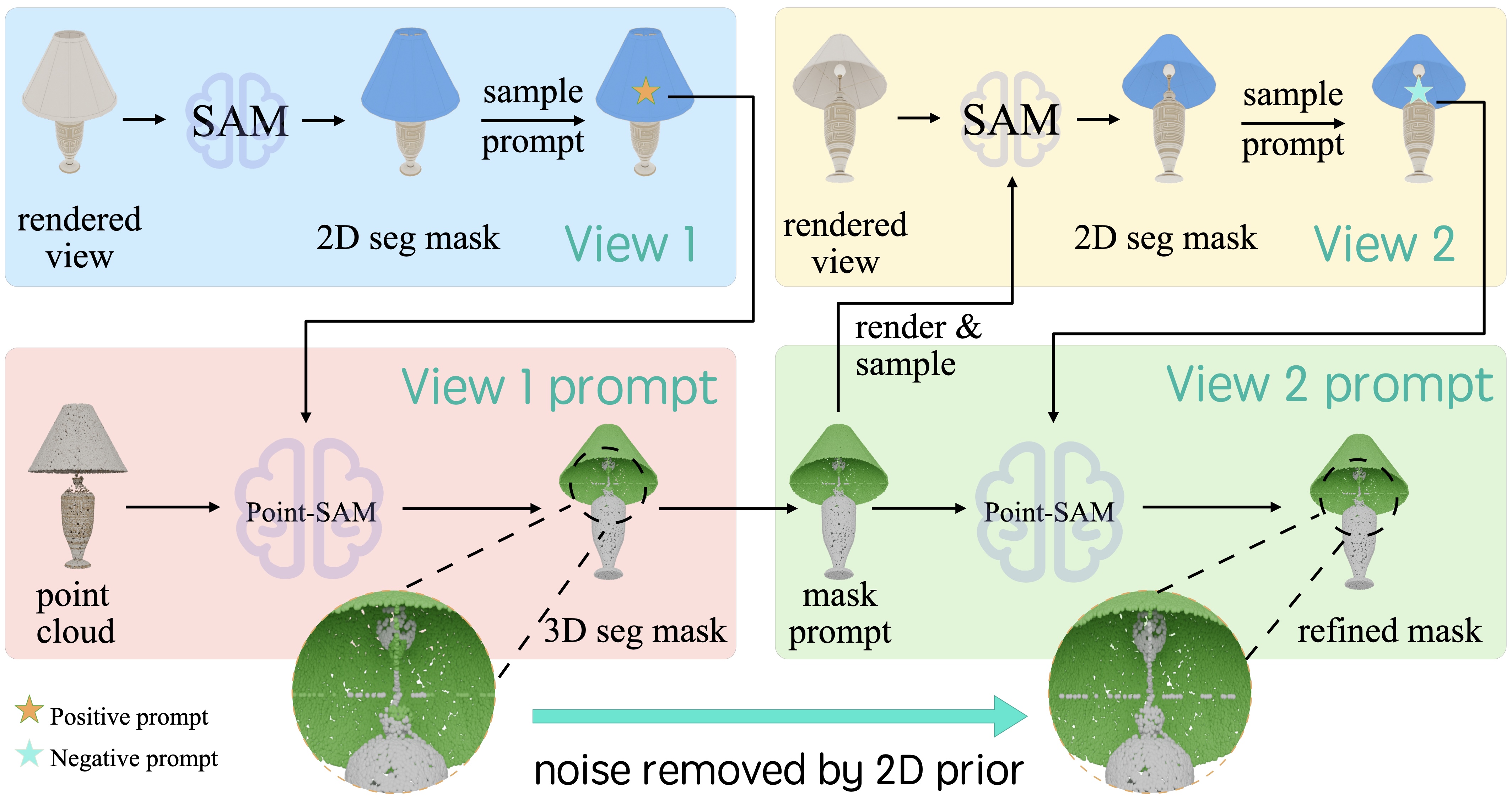

Pseudo Label Engine Initially, a relatively weak Point-SAM is trained on a mixture of existing datasets. Using both pre-trained Point-SAM and SAM, we generate pseudo labels by rendering RGB-D images of each mesh from six fixed camera positions and fusing these into a colored point cloud. For each 2D proposal, a corresponding 3D proposal is identified and refined: a randomly sampled 2D prompt is lifted to a 3D prompt, which prompts Point-SAM to predict a 3D mask on the fused point cloud. The process is then repeated, with modifications, at other views. Details and results are provided in the figure below.

Applications Our model has a variety of applications, such as 3D annotation, where users can provide prompts to guide the model to generate precise segmentation masks. It can be used for diverse data sources, such as indoor and outdoor scenes as well as synthetic data. Our model can also be used for zero-shot instance proposal.

Pseudo label generation and refinement process A weak Point-SAM is trained on a mixture of existing datasets. We then use both pre-trained Point-SAM and SAM to generate pseudo labels. For each mesh, we render RGB-D images from six fixed camera positions and fuse them into a colored point cloud. SAM generates diverse 2D proposals for each view, and for each 2D proposal, we aim to find a corresponding 3D proposal. Starting from the view of the 2D proposal, a randomly sampled 2D prompt is lifted to a 3D prompt, prompting Point-SAM to predict a 3D mask on the fused point cloud. The next 2D prompt is sampled from the error region between the 2D proposal and the projection of the 3D proposal at this view. The new 3D prompts, together with the previous 3D proposal mask, are used to update the 3D proposal. This process repeats until the IoU between the 2D and 3D proposals exceeds a threshold, ensuring 3D-consistent segmentation regularized by Point-SAM while retaining SAM's diversity. We repeat this process with modifications at other views to refine the 3D proposal further. If the IoU between the 2D and 3D proposals is below a threshold, the 3D proposal is discarded. The previous 3D proposal mask is used to prompt Point-SAM at each iteration, which refines the 3D masks by incorporating 2D priors from SAM through space carving.
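The single-view refinement loop can be sketched as follows. This is a simplified illustration under several assumptions: `predict_and_project` is a hypothetical callable standing in for lifting the prompts to 3D, running Point-SAM with the previous mask, and projecting the 3D result back to the view; the threshold of 0.8 and the iteration cap are made-up values; and the discard rule is folded into the same loop rather than applied during the refinement at other views.

```python
import numpy as np


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    """Intersection over union of two binary masks (1.0 if both are empty)."""
    a, b = a.astype(bool), b.astype(bool)
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union > 0 else 1.0


def sample_error_prompt(target_2d, projected_2d, rng):
    """Sample a pixel where the masks disagree.

    Returns ((u, v), is_positive), or None if the masks agree everywhere.
    A prompt is positive when the pixel belongs to the 2D proposal.
    """
    error = np.logical_xor(target_2d, projected_2d)
    vs, us = np.nonzero(error)
    if len(vs) == 0:
        return None
    i = rng.integers(len(vs))
    u, v = int(us[i]), int(vs[i])
    return (u, v), bool(target_2d[v, u])


def refine_proposal(target_2d, predict_and_project, rng,
                    iou_threshold=0.8, max_iters=10):
    """Iteratively add prompts until the projection matches the 2D proposal.

    Returns (projected_mask, iou), or None if the proposal is discarded.
    """
    prompts = []
    projected = np.zeros_like(target_2d, dtype=bool)
    for _ in range(max_iters):
        nxt = sample_error_prompt(target_2d, projected, rng)
        if nxt is None:
            break
        prompts.append(nxt)
        # Hypothetical stand-in for Point-SAM inference plus projection.
        projected = predict_and_project(prompts, projected)
        if mask_iou(target_2d, projected) >= iou_threshold:
            break
    iou = mask_iou(target_2d, projected)
    return (projected, iou) if iou >= iou_threshold else None
```

Starting from an empty projection means the first error region equals the 2D proposal itself, so the first prompt is simply a random positive click inside it, matching the randomly sampled initial prompt described above.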

We show videos of prompt-based segmentation. Our demo can be found here. The objects are out-of-distribution meshes: the first three are downloaded from Objaverse, and the last one is from Polycam, which is also an outdoor scene with complex geometry. You can find the example geometries in the annotation demo GitHub repo.

Video 1: Rhino from Objaverse

Video 2: Transformer from Objaverse

Video 3: Headphone from Objaverse

Video 4: Castle from Polycam

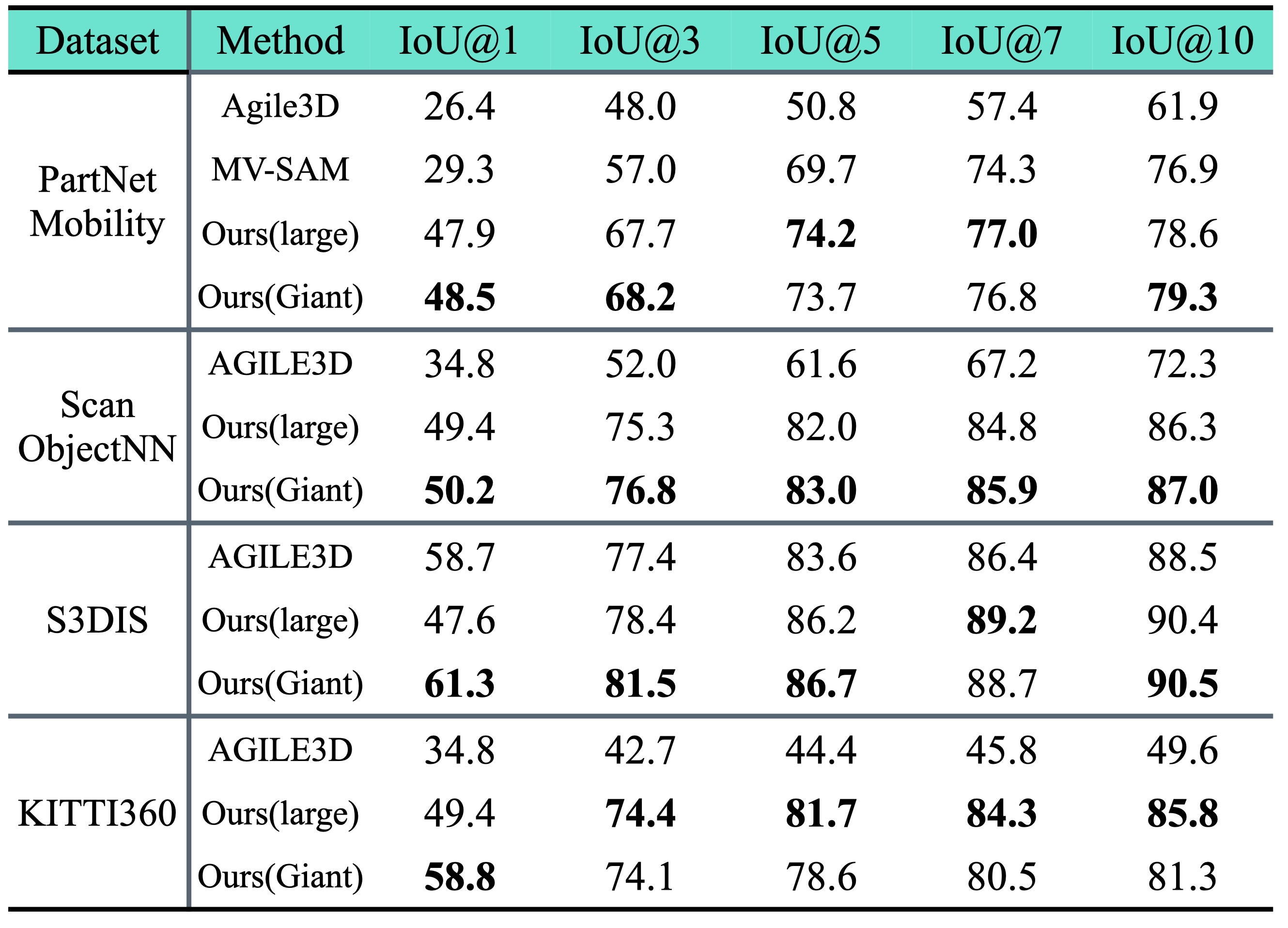

Baseline We compare Point-SAM with a multi-view extension of SAM, named MV-SAM, and a 3D interactive segmentation method, AGILE3D. Inspired by previous works, we introduce MV-SAM for zero-shot point-prompted segmentation as a strong baseline by rendering multi-view RGB-D images from each mesh. SAM is prompted at each view with simulated clicks from the error region center between SAM’s prediction and the 2D ground truth mask, with predictions lifted back to a sparse point cloud and merged into a single mask. For both MV-SAM and our method, the most confident prediction is selected if there are multiple outputs. AGILE3D uses a sparse convolutional U-Net, is trained on real-world scans of ScanNet40, and requires input scale adjustment for CAD models to ensure consistent performance.
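The merging step of MV-SAM can be illustrated with a simple voting scheme. The text does not specify how per-view predictions are merged, so the majority vote below is an assumption, as are the function name, the `min_ratio` parameter, and the input layout (for each view, the indices of the points visible in it and the binary label SAM assigned to each).

```python
import numpy as np


def merge_view_predictions(num_points, view_point_ids, view_labels, min_ratio=0.5):
    """Merge per-view binary labels into one point mask by voting.

    A point is foreground if more than `min_ratio` of the views that see it
    label it as foreground. Points seen by no view are background.
    """
    votes = np.zeros(num_points)
    seen = np.zeros(num_points)
    for ids, labels in zip(view_point_ids, view_labels):
        # np.add.at accumulates correctly even if an index repeats within a view.
        np.add.at(votes, ids, labels.astype(float))
        np.add.at(seen, ids, 1.0)
    ratio = np.divide(votes, seen, out=np.zeros(num_points), where=seen > 0)
    return ratio > min_ratio


# Toy usage: five points observed from three views.
view_point_ids = [np.array([0, 1, 2]), np.array([1, 2, 3]), np.array([0, 1])]
view_labels = [np.array([1, 1, 0]), np.array([1, 0, 1]), np.array([0, 1])]
merged = merge_view_predictions(5, view_point_ids, view_labels)
```

In this toy case point 0 receives one foreground vote out of two views and is therefore rejected under the strict `> 0.5` rule, which shows how inconsistent per-view predictions can erode the merged mask.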

Results Point-SAM shows superior zero-shot transferability and effectively handles data with different point counts and sources. It significantly outperforms MV-SAM, especially with few point prompts, demonstrating greater prompt efficiency. SAM struggles with multi-view consistency without extra fine-tuning, particularly with limited prompts. Point-SAM also surpasses AGILE3D across all datasets, notably in out-of-distribution scenarios like PartNet-Mobility and KITTI360, highlighting its strong zero-shot transferability. Figure 4 illustrates the qualitative comparison, showing Point-SAM's superior quality with a single prompt and significantly faster convergence compared to AGILE3D and MV-SAM.

@misc{zhou2024pointsampromptable3dsegmentation,
  title={Point-SAM: Promptable 3D Segmentation Model for Point Clouds},
  author={Yuchen Zhou and Jiayuan Gu and Tung Yen Chiang and Fanbo Xiang and Hao Su},
  year={2024},
  eprint={2406.17741},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2406.17741},
}